IS200ISBBG2AAB | GE | Bandwidth provided by interconnection

¥7,710.00

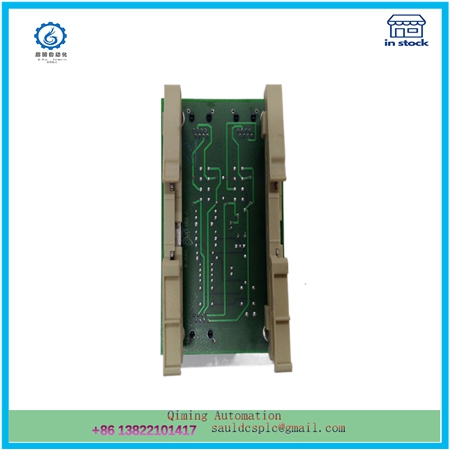

🔔Module Number: IS200ISBBG2AAB

⚠️Product status: Discontinued

🏚️Availability: In stock

🆕Condition: 100% new

🌍Sales country: All over the world

🥇Warranty: one year

📮Contact me: Sauldcsplc@gmail.com

💬WeChat/WhatsApp: +86 13822101417

☀️Have a good day! Thanks for visiting my website!

Description

- Many products are not yet listed on the shelves; please contact us for more products.

- If the product model and the displayed picture are inconsistent, the model number takes precedence. Contact us for photos of the specific product, and we will arrange for them to be taken in the warehouse for confirmation.

- We have 16 shared warehouses around the world, so please understand that it can sometimes take several hours to give you an accurate reply. We will, of course, respond to your questions as soon as possible.

If we paused adding faster compute engines to machines for two or even four years, server designs would be much better balanced. The memory and I/O subsystems could catch up and make better use of the compute engines already there, and fewer memory banks and I/O cards would be needed to keep those engines fed.

In fact, the transition from PCI-Express 3.0 at 8 GT/s per lane (whose specification was released in 2010) to the PCI-Express 4.0 interconnect at 16 GT/s per lane (which was supposed to arrive in 2013) was delayed by four years; it did not reach the field until 2017. The result was an impedance mismatch between the I/O bandwidth that compute engines actually need and the bandwidth that the PCI-Express interconnect can provide.

This mismatch persists, and PCI-Express keeps falling behind. That in turn has pushed companies to build their own interconnects for their accelerators rather than rely on the universal PCI-Express interconnect, which keeps server designs open and gives I/O a level playing field. For example, Nvidia created NVLink ports, then NVSwitch switches, and then the NVLink Switch fabric to link the memories of its GPU clusters together, and ultimately to connect its GPUs to its “Grace” Arm server CPU. AMD likewise created the Infinity Fabric interconnect to link CPUs together and then CPUs to GPUs; the same interconnect is also used inside its CPUs to connect chiplets.

📩Please contact us for the best price. Email: 【sauldcsplc@gmail.com】

📎📝Mailbox: sauldcsplc@gmail.com | IS200ISBBG2AAB

www.abbgedcs.com | Qiming Industrial Control | Simon +86 13822101417